South Korea’s New AI Law: Innovation First, Regulation Second

South Korea has officially become the first country in Asia-Pacific to enact comprehensive national AI legislation. The Framework Act on Artificial Intelligence was passed in December 2024 and takes effect on January 22, 2026.

Despite public framing as "balanced," the reality tells a different story. South Korea's AI law is substantially penalty-heavy, with significant operational requirements that will reshape how businesses deploy AI in the Korean market. What many companies don't yet realise is that compliance violations stack up quickly and expensively.

The Real Cost: Understanding South Korea's Penalty Structure

While headlines focus on comparing Korea's fines to the EU AI Act, a critical detail gets buried: South Korea imposes penalties of KRW 30 million (approximately USD $20,500) per violation. This is not a single fine per company or per system. Each failure to notify users, each unmanaged high-impact AI system, each missed compliance requirement triggers a separate penalty.

For a company operating multiple AI systems across different service lines, violations compound rapidly. A single audit could uncover dozens of incidents, each carrying a separate $20,000+ fine. For Korean businesses and foreign operators serving the Korean market, this represents a material financial exposure that grows with scale.
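The compounding described above is plain multiplication. The sketch below assumes the per-violation figure quoted in this article and takes the exchange rate implied by "KRW 30 million (approximately USD $20,500)"; the function name and the audit counts are hypothetical and chosen only for illustration.

```python
from __future__ import annotations

# Figures quoted in this article; the rate is implied by
# "KRW 30 million (approximately USD $20,500)".
FINE_PER_VIOLATION_KRW = 30_000_000
USD_PER_KRW = 20_500 / 30_000_000


def estimate_exposure(violations_by_type: dict[str, int]) -> tuple[int, float]:
    """Return total exposure as (KRW, approximate USD), one fine per violation."""
    total_violations = sum(violations_by_type.values())
    total_krw = total_violations * FINE_PER_VIOLATION_KRW
    return total_krw, total_krw * USD_PER_KRW


if __name__ == "__main__":
    # Hypothetical audit finding: these counts are made up for illustration.
    audit = {
        "missing_user_notice": 12,
        "unmanaged_high_impact_system": 3,
        "no_domestic_representative": 1,
    }
    krw, usd = estimate_exposure(audit)
    print(f"{krw:,} KRW (about USD {usd:,.0f})")
```

With these made-up counts, 16 findings come to KRW 480 million, or roughly USD 328,000 at the rate used here.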

The enforcement mechanism is also unambiguous: the Ministry of Science and ICT (MSIT) can investigate non-compliance, demand corrective action and impose these fines without delay. Leaking confidential business information during a compliance investigation is a criminal offence punishable by up to three years' imprisonment.

Who Must Comply?

The law applies to any organisation whose AI systems impact Korean users or markets, whether or not the company has a physical presence in Korea. If you offer AI services to Koreans, you're in scope.

Foreign companies meeting certain thresholds must appoint a "domestic representative" in Korea to manage compliance on their behalf. This requirement triggers if your organisation meets any of the following:

- Annual revenue exceeding 1 trillion won (~USD $750M)

- AI service-specific revenue over 10 billion won (~USD $7.5M)

- Average daily Korean users exceeding 1 million

- Prior notice of serious safety incident compliance failures

The appointment of this domestic representative is itself a legal obligation: failure to appoint one is a standalone violation carrying a separate KRW 30 million penalty.
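The "any of the following" test above can be expressed as a simple check. This is a minimal sketch using the thresholds as listed in this article; the `ForeignOperator` record and its field names are hypothetical, and how revenue or user counts are actually measured is left open here.

```python
from __future__ import annotations

from dataclasses import dataclass

# Thresholds as listed in this article.
TOTAL_REVENUE_KRW = 1_000_000_000_000  # 1 trillion won
AI_REVENUE_KRW = 10_000_000_000        # 10 billion won
DAILY_KOREAN_USERS = 1_000_000


@dataclass
class ForeignOperator:
    """Hypothetical record; field names are chosen for illustration."""
    annual_revenue_krw: int
    ai_service_revenue_krw: int
    avg_daily_korean_users: int
    prior_safety_incident_notice: bool = False


def triggered_thresholds(op: ForeignOperator) -> list[str]:
    """Return the name of every threshold the operator meets."""
    checks = {
        "annual_revenue": op.annual_revenue_krw > TOTAL_REVENUE_KRW,
        "ai_service_revenue": op.ai_service_revenue_krw > AI_REVENUE_KRW,
        "daily_korean_users": op.avg_daily_korean_users > DAILY_KOREAN_USERS,
        "prior_safety_notice": op.prior_safety_incident_notice,
    }
    return [name for name, hit in checks.items() if hit]


def needs_domestic_representative(op: ForeignOperator) -> bool:
    """Meeting any single threshold is enough to trigger the requirement."""
    return bool(triggered_thresholds(op))
```

Returning the list of triggered thresholds, rather than a bare yes or no, makes it easier to record why the obligation applies.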

The Operational Burden: High-Impact AI Requirements

High-impact AI systems, meaning those affecting safety, healthcare, hiring, lending, biometric analysis, education, energy, or other critical sectors, face strict obligations.

Organisations deploying high-impact AI must:

- Establish and maintain a risk management plan documenting all safety measures, monitoring protocols and incident response procedures

- Provide explainability of AI decisions, including key criteria and training data summaries, in a form understandable to users

- Implement user protection mechanisms including complaint-handling and redress procedures

- Ensure mandatory human oversight, with documented proof that qualified personnel review and approve AI outputs in sensitive contexts

- Conduct fundamental rights impact assessments evaluating potential effects on human rights, safety and societal impact

- Maintain comprehensive documentation of all compliance measures for a minimum of five years, subject to government inspection

Each of these requirements is operationally complex and resource-intensive. Risk management alone requires continuous monitoring, documentation and reporting. Human oversight demands trained personnel embedded in workflows. Impact assessments require domain expertise and potentially external consultation.

Failure to implement any of these measures, or failure to demonstrate implementation during an MSIT audit, constitutes a separate violation.

The Generative AI Disclosure Mandate

Every interaction with generative AI must be disclosed to users. Every output generated by AI must be labeled as such. Synthetic content (images, video, audio) created by AI must be clearly identified.

This requirement sounds straightforward in policy. In practice, it demands integration across your entire product stack: labeling systems, user interface changes, documentation and ongoing audits to ensure no AI-generated content escapes without proper disclosure. Each failure to label or disclose is a separate violation.
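One way to keep unlabeled content from escaping is a final gate that every output must pass before display. The sketch below is an assumption about how such a gate might look, not a statement of what the law or any product requires; the `Output` container, the `label` and `release` functions, and the notice wording are all hypothetical.

```python
from __future__ import annotations

from dataclasses import dataclass
from typing import Optional

# Placeholder wording, not statutory text.
AI_NOTICE = "This content was generated by AI."


@dataclass(frozen=True)
class Output:
    """Hypothetical container for anything shown to a user."""
    body: str
    ai_generated: bool
    disclosure: Optional[str] = None


def label(output: Output) -> Output:
    """Attach the notice to AI-generated output; leave other output unchanged."""
    if output.ai_generated and not output.disclosure:
        return Output(output.body, True, AI_NOTICE)
    return output


def release(output: Output) -> str:
    """Final gate before display: refuse AI output that lacks a disclosure."""
    if output.ai_generated and not output.disclosure:
        raise ValueError("AI-generated output has no disclosure label")
    if output.disclosure:
        return f"[{output.disclosure}] {output.body}"
    return output.body
```

Raising an error at the gate, instead of labeling silently, surfaces every code path that forgot to call `label`, which is the kind of gap an ongoing audit is meant to catch.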

The Domestic Representative Trap

For foreign operators, appointing a domestic representative creates an ongoing compliance liability. The domestic representative bears responsibility for:

- Submitting proof of risk management system implementation

- Requesting and obtaining MSIT confirmation for high-impact AI classification

- Ensuring safety and reliability measures are operationally deployed

- Maintaining and submitting required documentation

Critically, the parent company is held fully liable if the domestic representative fails to comply. This means outsourcing compliance responsibility does not distribute risk; it concentrates it. A single compliance failure by your Korean representative becomes your company's violation, triggering the same penalties.

Why This Law Is Scarier Than It Looks

The perception that Korea's fines are "lighter" than the EU's 7% of global turnover creates dangerous complacency. In reality:

- Penalties accumulate: Multiple violations within a single audit trigger multiple KRW 30 million fines

- Scope is broad: Every AI system affecting Korean users falls within the law's reach, including third-party integrations and legacy systems

- Documentation burden is heavy: Five years of documentation required; failure to produce evidence of compliance during an investigation constitutes non-compliance

- The extraterritorial reach is unlimited: Foreign companies with no Korean office still must comply if they serve Korean users

- Enforcement is imminent: The MSIT has already begun issuing guidance and will launch investigations upon the January 22, 2026 effective date

The Solution: On-Premises Compliance with Full Audit Visibility

Building AI compliance from scratch is operationally and financially prohibitive. But there's an alternative.

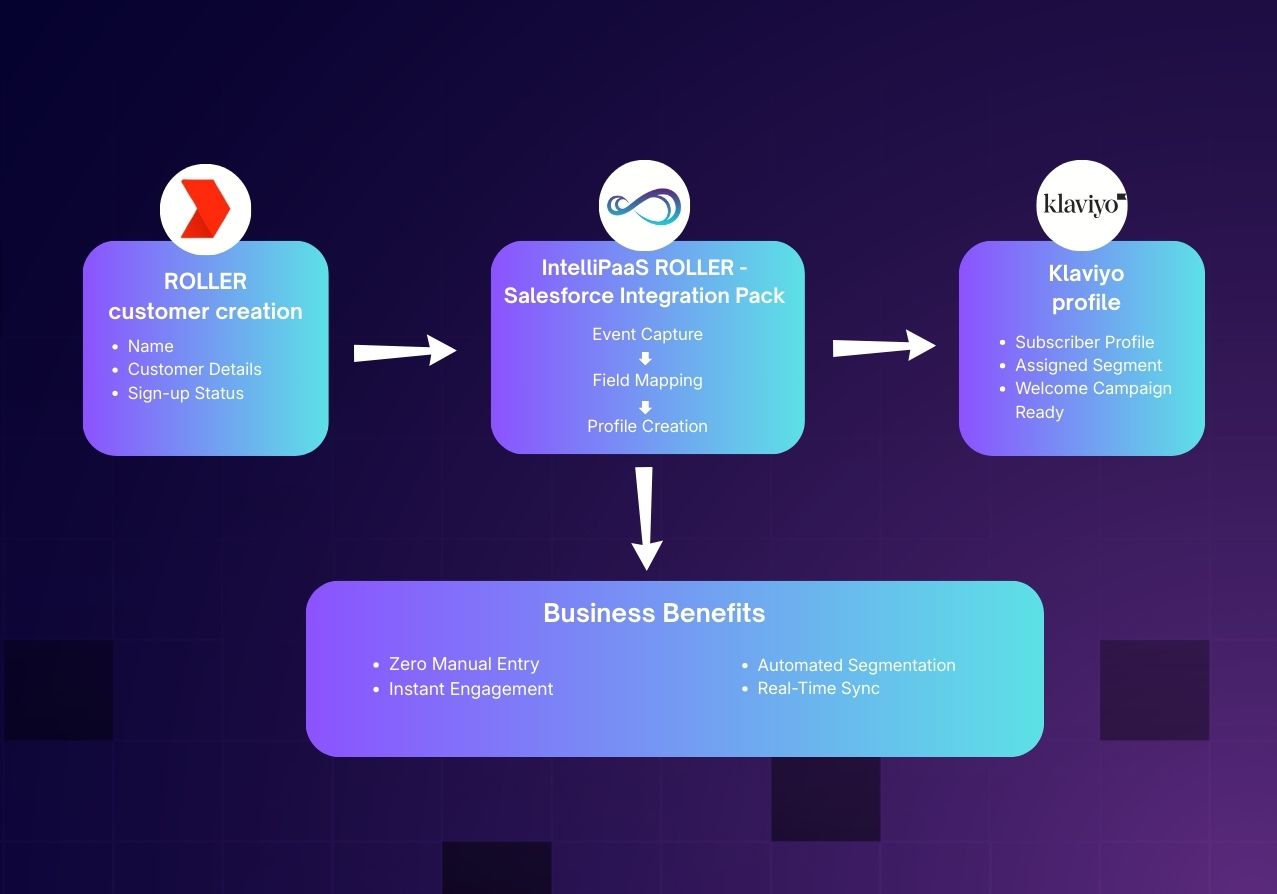

IntelliPaaS provides the integration and governance backbone to make compliance operational, not theoretical.

- On-Premises Deployment: Deploy your AI workflows entirely within Korea, ensuring no data leaves the country. This eliminates data residency exposure and positions you as fully compliant with Korean data sovereignty expectations.

- 100% Audit Trails: Every data point that enters your AI system is logged. Every transformation applied to that data is recorded. Every output generated by your AI is tracked. Every downstream use of that output is auditable. When the MSIT comes to investigate, you don't scramble to reconstruct your data flows. You produce complete, timestamped evidence of compliance.

- Complete Model Training Visibility: Know exactly what data was used to train your AI models, how that data was processed, where it came from and how it's being used. This transparency satisfies high-impact AI explainability requirements and enables rapid response to regulator inquiries.

- Human-in-the-Loop by Design: Insert mandatory approval steps into your AI workflows without custom development. IntelliPaaS enables you to require human review and sign-off for any high-impact AI decision, creating the documented human oversight the law demands.

- Integrated Risk Management: Connect your AI systems to your business systems (CRM, ERP, HR, etc.) so that every AI output is contextual, traceable and auditable within your operational workflows. When an AI decision affects a customer or employee, you have complete visibility into the decision pipeline.
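To illustrate what a timestamped audit trail and a recorded human sign-off involve in general, here is a minimal generic sketch. It is not the IntelliPaaS API: the `AuditLog` class and the `decide_with_oversight` function are hypothetical, and the hash chain is one common technique assumed here for making tampering detectable.

```python
from __future__ import annotations

import hashlib
import json
from datetime import datetime, timezone

GENESIS = "0" * 64  # stand-in "previous hash" for the first entry


class AuditLog:
    """Append-only log; each entry stores the hash of the entry before it."""

    def __init__(self) -> None:
        self.entries: list[dict] = []

    @staticmethod
    def _digest(record: dict) -> str:
        # Hash every field except the stored hash itself.
        payload = {k: v for k, v in record.items() if k != "hash"}
        encoded = json.dumps(payload, sort_keys=True).encode("utf-8")
        return hashlib.sha256(encoded).hexdigest()

    def append(self, event: str, detail: dict) -> dict:
        record = {
            "ts": datetime.now(timezone.utc).isoformat(),
            "event": event,
            "detail": detail,
            "prev": self.entries[-1]["hash"] if self.entries else GENESIS,
        }
        record["hash"] = self._digest(record)
        self.entries.append(record)
        return record

    def verify(self) -> bool:
        """Recompute the chain; any edited or reordered entry breaks it."""
        prev = GENESIS
        for record in self.entries:
            if record["prev"] != prev or record["hash"] != self._digest(record):
                return False
            prev = record["hash"]
        return True


def decide_with_oversight(
    log: AuditLog, model_output: dict, reviewer: str, approved: bool
) -> bool:
    """Log the model output, then the human decision, and return the decision."""
    log.append("model_output", model_output)
    log.append("human_review", {"reviewer": reviewer, "approved": approved})
    return approved
```

A caller would act on the AI output only when `decide_with_oversight` returns `True`, and could run `verify()` before handing the log to an auditor.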

Compliance as Competitive Advantage

Companies that proactively implement robust AI governance don't just avoid penalties. They build customer trust and regulatory relationships that strengthen market position. Customers increasingly demand transparency and accountability from AI systems. Regulators increasingly favor companies that demonstrate good-faith compliance efforts.

IntelliPaaS transforms compliance from a cost center into an operational advantage. Your AI workflows become auditable, explainable and defensible. Your documentation becomes automated, not manual. Your risk management becomes embedded, not bolted-on.

The Window for Action Is Now

South Korea's AI law takes effect on January 22, 2026. Companies deploying AI to Korean users must move quickly.

Request a demo to see how IntelliPaaS turns South Korea's compliance requirements into operational strength. See how on-premises deployment, complete audit trails and integrated human oversight transform regulatory risk into competitive advantage.
